Disaggregated Prefill-Decode: The Architecture Behind Meta's LLM Serving

Why I'm Writing This Series

I've been deep in research mode lately, studying how to optimize LLM inference. The goal is to eventually integrate these techniques into JarvisLabs - making it easier for our users to serve models efficiently without having to become infrastructure experts themselves.

As I learn, I want to share what I find. This series is part research notes, part explainer. If you're trying to understand LLM serving optimization, hopefully my journey saves you some time.

This first post covers disaggregated prefill-decode - a pattern I came across while reading through the vLLM router repository. Meta's team has been working closely with vLLM on this, and it addresses a fundamental problem that's been on my mind.

The Problem: Scaling LLM Serving Gets Complicated Fast

Getting started with LLM serving looks easy. Pick a framework like vLLM or SGLang, choose a model, spin it up. Done.

But the moment your traffic grows, you need more workers. And that's when simple scaling stops working. You add workers, set up load balancing - maybe round-robin, maybe random distribution. Performance improves... but not as much as you'd expect.

Why? Because there's something fundamental about how LLMs generate text that makes simple scaling inefficient.

The Two Phases of LLM Inference

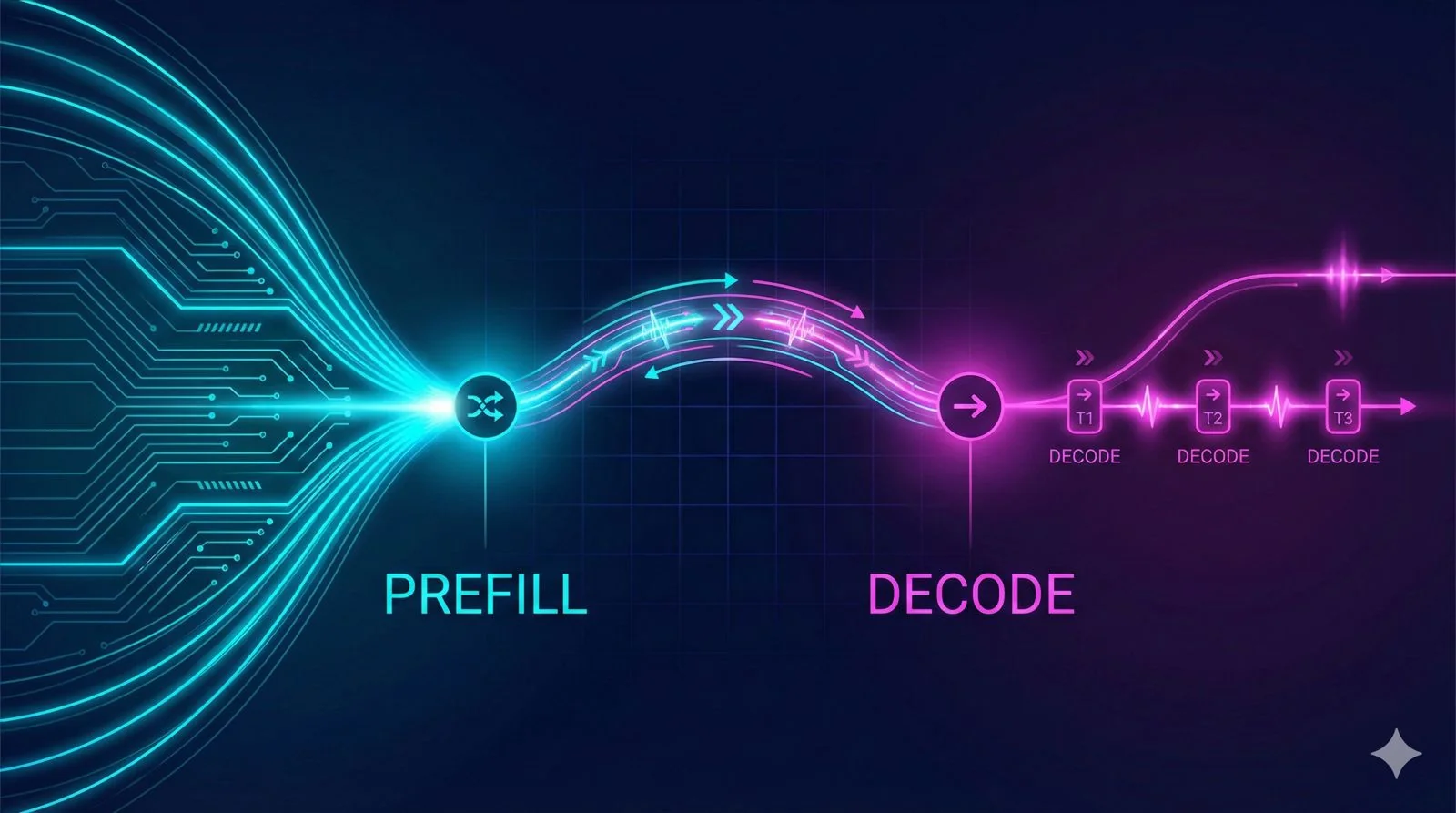

To understand the problem, you need to understand what happens inside an LLM when it processes your request. There are two distinct phases, and they behave very differently.

Phase 1: Prefill (Processing Your Prompt)

When your prompt arrives, the model processes all input tokens in parallel. This is the computationally intensive phase where we calculate the KV (Key-Value) cache for every layer in the model.

The prefill phase is compute-bound. We're doing massive matrix multiplications across all input tokens simultaneously. The GPU's tensor cores are maxed out.

Phase 2: Decode (Generating the Response)

Once the prompt is processed, the behavior changes completely. Now we generate tokens one at a time, because each new token depends on all the tokens before it.

The decode phase is memory-bound. We're reading the KV cache and model weights repeatedly, but only computing a small amount per step. The GPU spends most of its time waiting for memory, not computing.
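One way to make the compute-bound vs memory-bound distinction concrete is arithmetic intensity: FLOPs performed per byte moved. The sketch below is a back-of-the-envelope model of a single square linear layer in FP16, not a profile of a real model. The layer width (8192) and the ridge point (~295 FLOPs/byte, roughly an H100's dense FP16 peak divided by its HBM bandwidth) are my own assumptions for illustration.

```python
def arithmetic_intensity(num_tokens: int, d_model: int, bytes_per_value: int = 2) -> float:
    """FLOPs per byte moved for one (d_model x d_model) linear layer."""
    # One multiply and one add per weight, per token.
    flops = 2 * num_tokens * d_model * d_model
    # Weights are read once per forward pass, however many tokens share it.
    weight_bytes = d_model * d_model * bytes_per_value
    # Each token's input vector is read and its output vector is written.
    activation_bytes = 2 * num_tokens * d_model * bytes_per_value
    return flops / (weight_bytes + activation_bytes)


# Assumed ridge point: above it a kernel is compute-bound, below it memory-bound.
ASSUMED_RIDGE_FLOPS_PER_BYTE = 295
D_MODEL = 8192  # assumed layer width, chosen for illustration

prefill = arithmetic_intensity(num_tokens=4096, d_model=D_MODEL)  # whole prompt at once
decode = arithmetic_intensity(num_tokens=1, d_model=D_MODEL)      # one new token

for phase, intensity in [("prefill", prefill), ("decode", decode)]:
    bound = "compute-bound" if intensity > ASSUMED_RIDGE_FLOPS_PER_BYTE else "memory-bound"
    print(f"{phase}: {intensity:.1f} FLOPs/byte -> {bound}")
```

In this toy model a single decode step does about one FLOP per byte read, while a 4K-token prefill does thousands. Batching many decode requests into one step raises `num_tokens`, which is why decode throughput depends so heavily on batching.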

The Mismatch

The key insight is that these two phases have completely different resource requirements.

| Aspect | Prefill Phase | Decode Phase |
|---|---|---|
| Token processing | Parallel (all at once) | Sequential (one by one) |
| GPU bottleneck | Compute-bound | Memory-bound |
| Latency impact | Time To First Token (TTFT) | Inter-Token Latency (ITL) |
| GPU utilization per request | High | Low |

When you run both phases on the same GPU, they compete for resources. A long prefill blocks decode steps for other requests. Decode operations fragment GPU utilization during prefill.

The Standard Approach and Its Limitations

In a typical vLLM deployment, each worker handles everything: it runs both prefill and decode for every request routed to it.

The problems:

- Prefill interference: A 4,000-token prompt blocks decode steps for other requests

- Decode fragmentation: Many small decode operations can't utilize GPU compute efficiently

- Scheduling complexity: The scheduler must balance two very different workloads

- Scaling limitations: You can only scale workers as a unit, not by phase

Adding more workers with load balancing helps, but you're still mixing workloads on each worker. You're not solving the fundamental resource contention.
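To put a number on prefill interference, here's a toy timeline for one in-flight decode stream. The timings (20 ms per decode step, 400 ms for a long prefill) are made-up illustrative values, and the model assumes the prefill runs to completion before decode resumes (no chunked prefill).

```python
def inter_token_gaps_ms(num_steps, decode_step_ms, prefill_ms=0.0, prefill_at_step=None):
    """Gap before each generated token for a single decode stream."""
    gaps = []
    for step in range(num_steps):
        gap = decode_step_ms
        # If another request's prefill lands on this worker, the stream waits for it.
        if prefill_at_step is not None and step == prefill_at_step:
            gap += prefill_ms
        gaps.append(gap)
    return gaps


# Shared worker: a long prompt arrives while this stream is at step 2.
colocated = inter_token_gaps_ms(5, 20.0, prefill_ms=400.0, prefill_at_step=2)
# Worker that never runs prefill: every gap is just one decode step.
decode_only = inter_token_gaps_ms(5, 20.0)

print("colocated max ITL:", max(colocated), "ms")
print("decode-only max ITL:", max(decode_only), "ms")
```

The average latency barely moves, but the worst-case inter-token gap jumps by the full prefill time. That tail is what users notice as a stutter mid-response.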

Disaggregated Prefill-Decode: Meta's Solution

The idea is straightforward: separate the phases onto different workers.

How It Works

Step 1: Prefill

- Request arrives at the router

- Router sends it to a prefill worker

- Prefill worker processes the entire prompt and builds the KV cache

Step 2: Handoff

- Router notifies an available decode worker

- Decode worker connects directly to the prefill worker

- KV cache transfers from prefill to decode worker

Step 3: Decode

- Decode worker generates tokens sequentially

- Uses the transferred KV cache

- Streams response back to client
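The three steps above can be sketched as a small in-process simulation. This is not the vLLM router's code: every class and method name here (`Router`, `PrefillWorker`, `DecodeWorker`, `KVCache`) is hypothetical, the KV cache is just a token counter, round-robin selection is an assumption, and the "transfer" is a plain object handoff rather than a network copy.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class KVCache:
    request_id: str
    num_tokens: int  # stand-in for the real per-layer key/value tensors


@dataclass
class PrefillWorker:
    name: str

    def prefill(self, request_id: str, prompt_tokens: list) -> KVCache:
        # Step 1: process the whole prompt and build its KV cache.
        return KVCache(request_id, num_tokens=len(prompt_tokens))


@dataclass
class DecodeWorker:
    name: str

    def decode(self, kv: KVCache, max_new_tokens: int) -> list:
        # Step 3: generate one token at a time, extending the transferred cache.
        output = []
        for i in range(max_new_tokens):
            output.append(f"<tok{i}>")  # placeholder for a sampled token
            kv.num_tokens += 1
        return output


class Router:
    def __init__(self, prefill_workers, decode_workers):
        # Round-robin is an assumption; a real router can be load-aware.
        self._prefill = cycle(prefill_workers)
        self._decode = cycle(decode_workers)

    def handle(self, request_id, prompt_tokens, max_new_tokens):
        prefill_worker = next(self._prefill)
        kv = prefill_worker.prefill(request_id, prompt_tokens)
        decode_worker = next(self._decode)
        # Step 2: in a real deployment the decode worker pulls the cache from
        # the prefill worker over the network; here we just pass the object.
        tokens = decode_worker.decode(kv, max_new_tokens)
        return {
            "prefill_worker": prefill_worker.name,
            "decode_worker": decode_worker.name,
            "tokens": tokens,
            "kv_tokens": kv.num_tokens,
        }


router = Router(
    [PrefillWorker("prefill-0")],
    [DecodeWorker("decode-0"), DecodeWorker("decode-1")],
)
first = router.handle("req-1", ["The", "quick", "brown", "fox"], max_new_tokens=3)
second = router.handle("req-2", ["Hello"], max_new_tokens=2)
print(first["prefill_worker"], "->", first["decode_worker"])
print(second["prefill_worker"], "->", second["decode_worker"])
```

Note that the two pools are sized independently here (one prefill worker, two decode workers), which is the property the rest of this post builds on.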

Why This Works

1. Independent Scaling

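You can scale each phase based on your actual workload: a prompt-heavy workload (long inputs, short outputs) gets more prefill workers, while a generation-heavy workload gets more decode workers.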
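Here's a rough sizing sketch for independent scaling. The per-worker throughput figures (10,000 prompt tokens/s per prefill worker, 1,000 output tokens/s per decode worker) are placeholders I made up; you'd replace them with numbers measured on your own hardware and model.

```python
import math


def workers_needed(req_per_s, avg_prompt_tokens, avg_output_tokens,
                   prefill_tok_per_s, decode_tok_per_s):
    """Return (prefill_workers, decode_workers) for a steady-state workload."""
    prefill_load = req_per_s * avg_prompt_tokens   # prompt tokens arriving per second
    decode_load = req_per_s * avg_output_tokens    # output tokens to generate per second
    prefill = max(1, math.ceil(prefill_load / prefill_tok_per_s))
    decode = max(1, math.ceil(decode_load / decode_tok_per_s))
    return prefill, decode


# Assumed per-worker throughput, for illustration only.
PREFILL_TOK_PER_S = 10_000
DECODE_TOK_PER_S = 1_000

# RAG-style traffic: long prompts, short answers.
rag = workers_needed(10, 8_000, 100, PREFILL_TOK_PER_S, DECODE_TOK_PER_S)
# Chat-style traffic: short prompts, long answers.
chat = workers_needed(10, 500, 800, PREFILL_TOK_PER_S, DECODE_TOK_PER_S)

print("RAG  (prefill, decode):", rag)
print("Chat (prefill, decode):", chat)
```

Same request rate, opposite pool shapes. With colocated workers you'd have to provision for the heavier phase on every machine.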

2. Workload-Specific Optimization

Each worker type can be optimized for its task:

| Optimization | Prefill Worker | Decode Worker |
|---|---|---|
| Batch size | Few requests per batch (a long prompt already saturates compute) | Many requests per batch (amortizes weight reads) |
| GPU type | High compute (H100) | High memory bandwidth |
| Scheduling | Batch similar-length prompts | Continuous batching |
| Memory | Temporary KV cache | Persistent KV cache |

3. No More Interference

Prefill operations never block decode. Decode never fragments prefill compute. Clean separation.

Real-World Performance Gains

This isn't just theory. Research teams and production deployments have published results:

| System | Key Result | Source |
|---|---|---|
| DistServe | 7.4x more requests served, or 12.6x tighter SLOs | OSDI 2024 |
| Splitwise | 2.35x throughput with same resources, or 1.4x throughput at 20% lower cost | Microsoft Research |
| Mooncake | Up to 525% throughput increase in simulated scenarios with KV cache disaggregation | Moonshot AI |
| SGLang + DeepSeek-R1 | 52.3k input tokens/s, 22.3k output tokens/s per node on 96 H100s | SGLang Blog |

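The pattern is consistent: across these reports, separating prefill and decode yields gains from roughly 1.4x to 7x over colocated serving. The numbers come from different workloads and metrics, so they aren't directly comparable, but they all point the same way.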

Meta, LinkedIn, Mistral, and HuggingFace are already running vLLM with disaggregated serving in production. NVIDIA announced Dynamo at GTC 2025 specifically for this pattern.

The Hard Part: KV Cache Transfer

The disaggregated approach sounds great, but there's a massive challenge: how do you move the KV cache fast enough?

Let's Do the Math

For a Llama-3.1-70B model:

KV cache per token:

├── 80 layers

├── 8 KV heads per layer

├── 128 dimensions per head

├── 2 (K and V)

├── 2 bytes (FP16)

└── = 80 × 8 × 128 × 2 × 2 = 327,680 bytes per token

For a 4K token prompt:

└── 4,096 × 327,680 = 1.34 GB of KV cache

1.34 GB per request. And this needs to transfer fast enough that the decode worker isn't sitting idle waiting.
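The same arithmetic as a small helper, so you can plug in other models. The function name is my own; the Llama-3.1-70B values are the ones listed above.

```python
def kv_cache_bytes_per_token(num_layers, num_kv_heads, head_dim, bytes_per_value=2):
    """Bytes of KV cache stored per token across all layers."""
    # The factor of 2 covers the separate key and value tensors.
    return num_layers * num_kv_heads * head_dim * 2 * bytes_per_value


# Llama-3.1-70B figures from the breakdown above, FP16 cache.
per_token = kv_cache_bytes_per_token(num_layers=80, num_kv_heads=8, head_dim=128)
prompt_bytes = 4096 * per_token

print(f"{per_token:,} bytes per token")
print(f"{prompt_bytes / 1e9:.2f} GB for a 4,096-token prompt")
```

Passing `bytes_per_value=1` models an 8-bit KV cache and halves both numbers.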

Network Requirements

For a responsive system, TTFT should be under 500ms. If prefill takes 200ms, you have ~300ms for KV cache transfer.

Required bandwidth = 1.34 GB / 0.3 seconds ≈ 4.5 GB/s minimum

Let's see how different networks stack up:

| Network Type | Bandwidth | Transfer Time (1.34 GB) |
|---|---|---|
| 1 GbE | 125 MB/s | 10.7 seconds |
| 10 GbE | 1.25 GB/s | 1.07 seconds |
| 25 GbE | 3.125 GB/s | 0.43 seconds |
| 100 GbE | 12.5 GB/s | 0.11 seconds |
| InfiniBand HDR | 25 GB/s | 54 ms |
| NVLink (within node) | 600 GB/s | 2.2 ms |

The takeaway: Internet connections won't work, and even 10 or 25 GbE misses the ~300ms budget in this example. You need workers on the same local network with high-speed interconnects - ideally InfiniBand or NVLink.
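Here's the transfer-time table as a quick script, checked against the latency budget. It uses the peak line rates from the table; real transfers will land below peak because of protocol overhead, so treat the results as best-case. The 500 ms TTFT target and 200 ms prefill time are the example figures from this section.

```python
KV_CACHE_BYTES = 4096 * 327_680  # 4K-token prompt for Llama-3.1-70B, FP16

# Peak bandwidth in bytes per second, as listed in the table.
LINK_BANDWIDTH = {
    "1 GbE": 125e6,
    "10 GbE": 1.25e9,
    "25 GbE": 3.125e9,
    "100 GbE": 12.5e9,
    "InfiniBand HDR": 25e9,
    "NVLink (within node)": 600e9,
}

TTFT_TARGET_MS = 500
PREFILL_MS = 200
budget_ms = TTFT_TARGET_MS - PREFILL_MS  # time left for the KV cache transfer


def transfer_time_ms(num_bytes, bytes_per_s):
    """Best-case transfer time at peak bandwidth, in milliseconds."""
    return num_bytes / bytes_per_s * 1000


required_gb_per_s = KV_CACHE_BYTES / (budget_ms / 1000) / 1e9
print(f"Required bandwidth: {required_gb_per_s:.1f} GB/s")

fits_budget = []
for name, bandwidth in LINK_BANDWIDTH.items():
    ms = transfer_time_ms(KV_CACHE_BYTES, bandwidth)
    ok = ms <= budget_ms
    if ok:
        fits_budget.append(name)
    print(f"{name:22s} {ms:10.1f} ms  {'ok' if ok else 'too slow'}")
```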

Implementation: vLLM Router

Meta's team has implemented this in the vLLM router, building on SGLang's router work.

Key Features

Smart Request Routing: Classifies requests by input token count, expected output length, and current worker utilization.

Direct Worker Communication: After prefill, the decode worker connects directly to the prefill worker for KV cache transfer - bypassing the router to minimize latency.

Health Monitoring: Tracks GPU utilization, KV cache memory usage, queue depths, and transfer throughput.
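To give a feel for how health metrics can feed routing decisions, here's a minimal load-aware selection sketch. It is not the vLLM router's actual policy: `WorkerStats`, `pick_worker`, and the 0.9 KV cache usage cutoff are all hypothetical names and values I chose for illustration.

```python
from dataclasses import dataclass


@dataclass
class WorkerStats:
    name: str
    queue_depth: int         # requests waiting on this worker
    kv_cache_usage: float    # fraction of KV cache memory in use, 0.0 to 1.0
    healthy: bool = True


def pick_worker(workers, max_kv_usage=0.9):
    """Pick the healthy worker with the shortest queue, skipping nearly full ones."""
    candidates = [
        w for w in workers
        if w.healthy and w.kv_cache_usage < max_kv_usage
    ]
    if not candidates:
        raise RuntimeError("no worker available")
    # Shortest queue wins; KV cache usage breaks ties.
    return min(candidates, key=lambda w: (w.queue_depth, w.kv_cache_usage))


decode_pool = [
    WorkerStats("decode-0", queue_depth=4, kv_cache_usage=0.50),
    WorkerStats("decode-1", queue_depth=1, kv_cache_usage=0.95),  # cache nearly full
    WorkerStats("decode-2", queue_depth=2, kv_cache_usage=0.30),
    WorkerStats("decode-3", queue_depth=0, kv_cache_usage=0.10, healthy=False),
]
chosen = pick_worker(decode_pool)
print("Selected:", chosen.name)
```

The idle worker is skipped because it's unhealthy and the shortest healthy queue is skipped because its cache is nearly full, which is the kind of decision a plain round-robin balancer can't make.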

What This Means for JarvisLabs

Reading about this architecture made me realize something: the current JarvisLabs infrastructure wasn't built for this pattern - because this pattern didn't exist when we built it.

The Traditional Cloud Model

Most GPU cloud platforms (including JarvisLabs today) assume workers are independent:

Workers communicate across the internet. This works fine for independent requests, but KV cache transfer over the internet? Way too slow.

What Disaggregated Inference Needs

The requirements:

- Co-located workers: Same rack, same datacenter zone

- High-speed interconnect: InfiniBand, NVLink, or at minimum 100 GbE

- Shared memory architecture: For even faster transfers within a node

- Coordinated scheduling: Workers need to be aware of each other

The Evolution We Need

| Current Model | Future Model |
|---|---|
| Isolated instances | Interconnected worker pools |
| Internet networking | High-speed fabric networking |
| Pay per GPU | Pay per inference unit |
| User manages scaling | Platform-managed autoscaling |

This is one of the things I'm researching for JarvisLabs. It's not a simple software update - it requires infrastructure-level changes. But Meta, NVIDIA, and others are already building for this.

When Should You Use This?

Disaggregated prefill-decode isn't always the right choice.

Good Use Cases

- High-throughput production serving: Many concurrent requests, need consistent latency

- Variable prompt lengths: Mix of short and long prompts, summarization, RAG

- Strict SLAs: Requirements on TTFT and ITL that standard serving can't meet

Not Worth the Complexity For

- Development and testing: Low request volume, standard vLLM is fine

- Small models: KV cache is small enough that transfer isn't a bottleneck

- Batch processing: TTFT doesn't matter, just throughput

Questions I'm Still Exploring

As I dig deeper into this, a few things I'm thinking about:

Can we use different GPU types for prefill vs decode?

Since prefill is compute-bound and decode is memory-bound, it seems like we could use high-compute GPUs (H100) for prefill and cheaper or older GPUs with good memory bandwidth for decode. This could significantly reduce costs. I haven't found benchmarks on this yet.

Does this make sense for smaller models?

If the model is small enough to run multiple workers on the same server, KV cache transfer becomes nearly instant (NVLink speeds). This might make disaggregation viable even for 7B-13B models where the complexity would otherwise not be worth it.

Implementation detail: ZeroMQ in vLLM

Meta's internal implementation uses custom connectors for KV cache transfer. The open-source vLLM router uses ZeroMQ (0MQ) instead. I'm curious about the performance difference and whether this becomes a bottleneck at scale.

Conclusion

Disaggregated prefill-decode changes how an LLM serving cluster is laid out. By separating the compute-bound prefill phase from the memory-bound decode phase:

- Each phase scales independently

- Workers optimize for their specific workload

- No more interference between prefill and decode work

- Better latency and throughput overall

The catch: it requires high-speed networking between workers. This is pushing the entire industry - cloud platforms included - toward new infrastructure patterns.

For production workloads with high traffic and strict latency requirements, this architecture is worth exploring. The vLLM router provides an open-source starting point.

For most development scenarios, standard vLLM is still the right choice. Know your requirements, measure your bottlenecks, and optimize accordingly.

I'll keep sharing what I learn. If you have questions or want to discuss LLM optimization, feel free to reach out.

Related Articles

If you're exploring LLM optimization, these posts cover other techniques we've benchmarked:

- Speculative Decoding with vLLM - Using draft models to speed up token generation by 1.4-1.6x

- The Complete Guide to LLM Quantization - AWQ, GPTQ, Marlin, and more - benchmarked for quality and speed

References

Code & Implementations

- vLLM Router - Meta's disaggregated inference implementation

- SGLang - The original router implementation

- vLLM Documentation

Papers

- Splitwise: Efficient Generative LLM Inference Using Phase Splitting - Microsoft's approach

- DistServe: Disaggregating Prefill and Decoding for Goodput-optimized LLM Serving - Academic analysis

- Mooncake: A KVCache-centric Disaggregated Architecture - Moonshot AI's KV cache approach