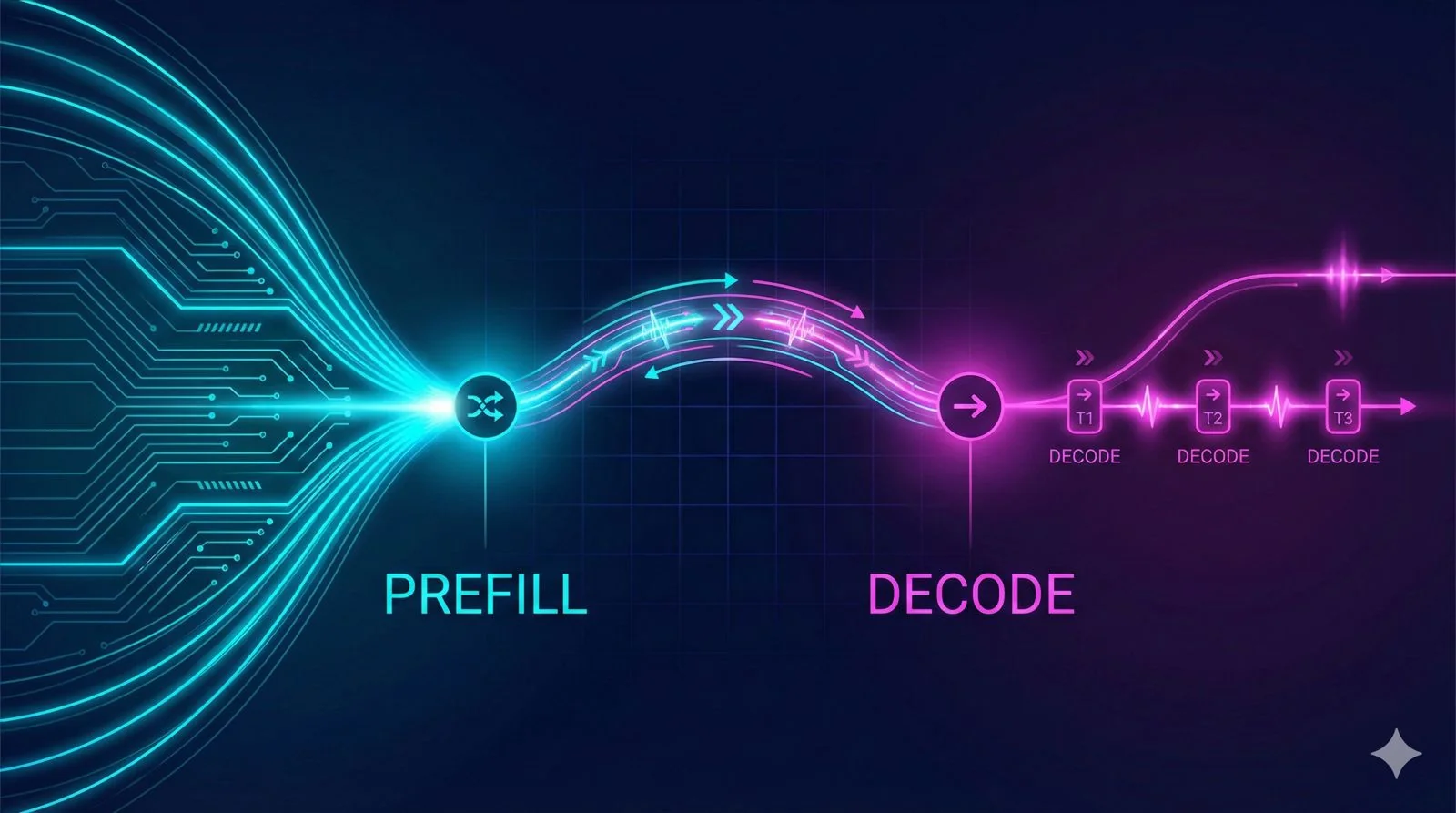

Disaggregated Prefill-Decode: The Architecture Behind Meta's LLM Serving

Part 1 of my LLM optimization research series, exploring how Meta's disaggregated prefill-decode strategy separates prompt processing (prefill) from token generation (decode), and what that separation means for JarvisLabs.
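The split described above can be sketched as a toy, single-process Python model. This is not Meta's implementation. The `Request` class, the `toy_step` function standing in for a model forward pass, and the in-memory queue standing in for KV-cache transfer are all hypothetical simplifications. The prefill stage processes the whole prompt at once, builds the KV cache, and emits the first token. A separate decode stage then receives that cache and generates the remaining tokens one step at a time.

```python
from collections import deque
from dataclasses import dataclass, field

VOCAB_SIZE = 50  # hypothetical toy vocabulary size


def toy_step(prev_state: int, token: int) -> int:
    """Hypothetical stand-in for a forward pass: returns the 'KV state' for one token."""
    return (prev_state * 31 + token) % 1_000_003


@dataclass
class Request:
    request_id: int
    prompt: list[int]    # assumed non-empty
    max_new_tokens: int  # assumed >= 1 (prefill always emits the first token)
    kv_cache: list[int] = field(default_factory=list)
    output: list[int] = field(default_factory=list)


def prefill(req: Request) -> Request:
    """Prefill: consume the full prompt, build the KV cache, emit the first token.
    A real model does this in one batched pass over all prompt tokens (compute-bound)."""
    state = 0
    for tok in req.prompt:
        state = toy_step(state, tok)
        req.kv_cache.append(state)
    req.output.append(state % VOCAB_SIZE)
    return req


def decode(req: Request) -> Request:
    """Decode: generate the remaining tokens one at a time, reusing the cached
    states instead of reprocessing the prompt (memory-bandwidth-bound in real systems)."""
    while len(req.output) < req.max_new_tokens:
        state = toy_step(req.kv_cache[-1], req.output[-1])
        req.kv_cache.append(state)
        req.output.append(state % VOCAB_SIZE)
    return req


def serve_disaggregated(requests: list[Request]) -> list[Request]:
    """Run every request through a prefill 'pool', hand it off, then through a decode 'pool'."""
    handoff = deque()  # stands in for shipping the KV cache between GPU pools
    for req in requests:
        handoff.append(prefill(req))
    finished = []
    while handoff:
        finished.append(decode(handoff.popleft()))
    return finished
```

Because the decode stage only needs the KV cache and the last emitted token, the two phases can run on different hardware pools that are sized and batched independently. The trade-off, which the in-memory queue hides here, is that a real system must move the KV cache between machines.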