How to Deploy and Connect with Ollama LLM Models: A Comprehensive Guide

In this blog, we will explore how to

In this blog, we will explore how to

- Pull an LLM model in Ollama

- Chat through command line

- Connect with a local client

- Communicate with a rest API

- Check for logs

To follow on with the blog, please create an instance using framework Ollama,

- Pick a GPU - If you want to run some of the bigger models like mixtral, llama2:70b, pick a bigger GPU say with 40+ GB GPU memory. For smaller models like llama2b, GPUs with 16 - 24 GB GPU VRam would suffice.

- Storage - For larger models choose 100 - 200GB.

Pull an LLM model

Once the instance is launced, you can pull a model from

- Terminal

- API

For complete list of models check here.

Terminal

You can open a terminal from the Jupyter Lab or by ssh to your instance. From the terminal run the below command.

ollama pull mixtral

API

You can also connect to the API through its API endpoint. You can make an API call similar to the below call.

curl https://******.jarvislabs.net/api/pull -d '{

"name": "mixtral"

}'

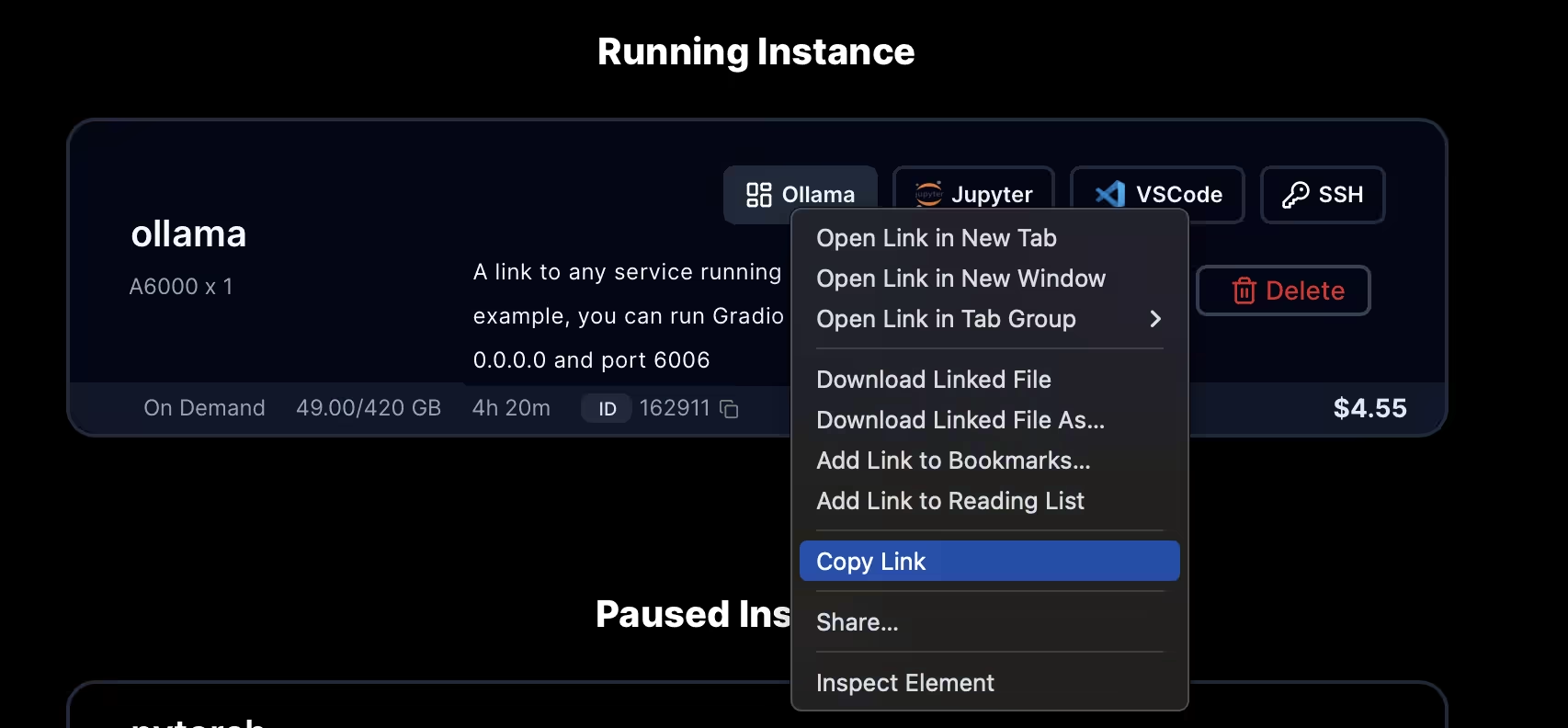

You can find the API endpoint URI for your instance from here.

Note

While downloading the model, if you notice that the process becomes extremely slow then kill the process by pressing Command + C / Ctrl + C, and try again. There is a known issue with Ollama, and hopefully it will be fixed in the upcoming releases.

Chat through using command line

Now we can connect from a terminal on our local instance and interact with the model. Without worrying about the GPU fans or the hot air 😀

export OLLAMA_HOST=https://******.jarvislabs.net/

#Lists all the downloaded models

ollama list

#Replace mixtral with your favourite model

ollama run mixtral

Use a local Graphical interface

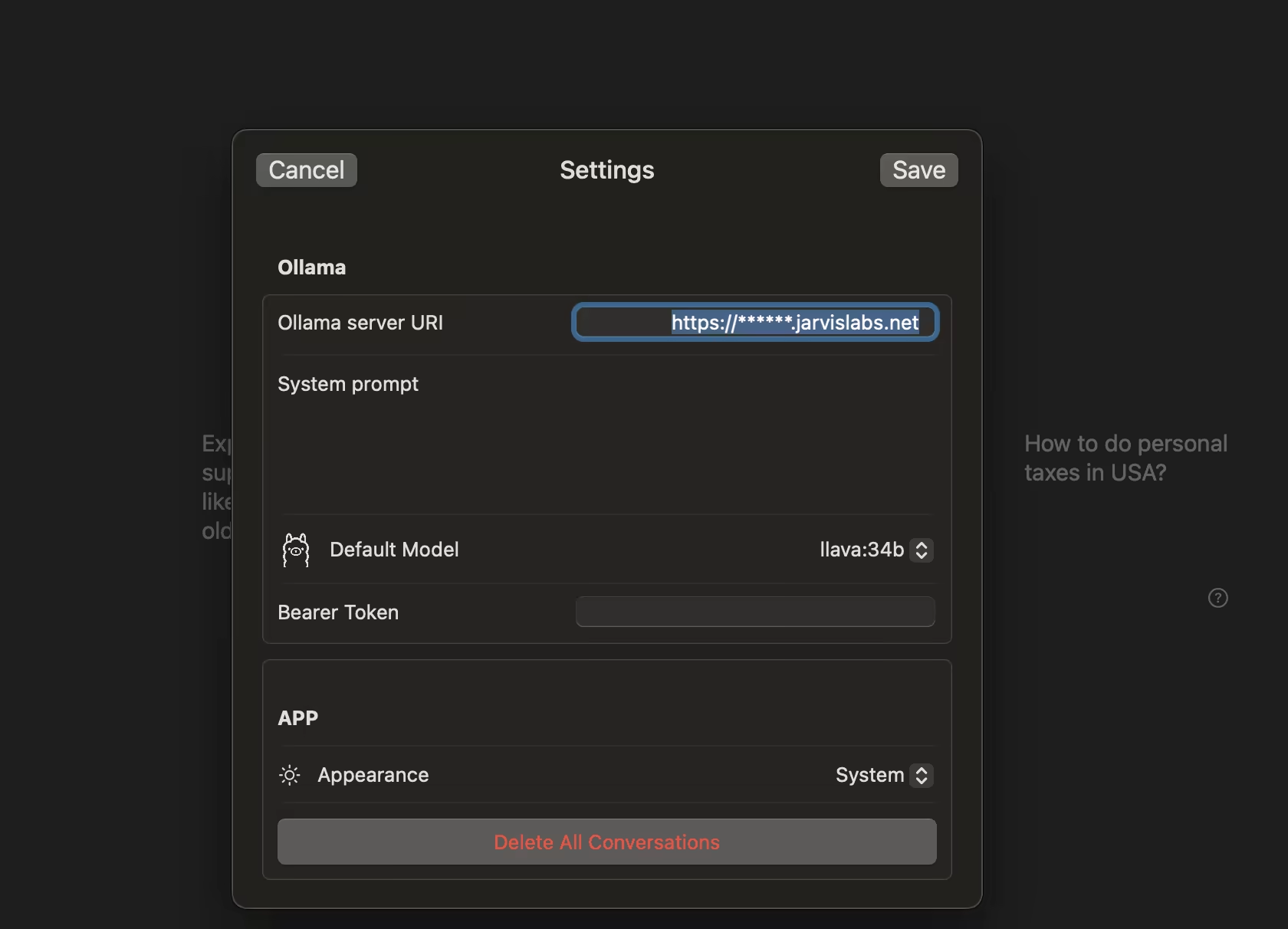

If you are used to an interface like ChatGPT then you can choose an app available for your OS. For Mac, I tried Enchanted and I liked it. You can directly download it from app store. Pass the API endpoint under the settings - Ollama Server URI

Using API

We can also communicate with Ollama and our models with a simple API. Pretty useful while building apps.

Example:

curl https:******.jarvislabs.net/api/generate -d '{

"model": "mixtral",

"prompt":"Why is LLMs so much fun",

"stream": false

}'

Ollama serve logs

On linux servers, we can start running the

ollama serve

All of our above operations was interacting with it. The logs are placed under /home/ollama.log, which could be useful for any debugging process.